Performance and Scalability

Overview

Teaching: 30 min

Exercises: 30 minQuestions

How do we measure parallel performance?

Objectives

Learn how to measure parallel scaling.

Understand limits to parallel speedup.

Although speed is not the only reason one might be interested in parallel programming, speed is still a central consideration. If you want to solve larger problems using parallel computing, it won’t do if the parallel code runs slower than the sequential code.

Therefore it’s important to be able to measure and analyze performance.

Speedup

Parallel speedup is defined as the ratio of the runtime of the best sequential algorithm (on one processor) to the runtime of the parallel algorithm to solve the same problem on $N$ processors:

\[Speedup = \frac{T_s}{T_p}\]…where $T_s$ is sequential runtime and $T_p$ is parallel runtime. Sequential runtime is (usually!) longer than parallel runtime, so a larger speedup is better.

Optimally, the speedup from parallelization would be linear. Doubling the number of processing elements should halve the runtime, and doubling it a second time should again halve the runtime. That would mean that every processor would be contributing 100% of its computational power. However, very few parallel algorithms achieve optimal speedup. Most of them have a near-linear speedup for small numbers of processing elements, which flattens out into a constant value for large numbers of processing elements.

Efficiency

Efficiency is the ratio between the actual speedup and the ideal speedup obtained when using a certain number of processors. We just argued that the ideal speedup is proportional to the number of processors, $N$, so:

\[Efficiency=\frac{Speedup}{N}=\frac{T_s}{T_p*N}\]Efficiency can be also understood as the fraction of time for which the processors are doing useful work, or the fraction of the processors which are doing useful work on average.

- 0-100%

- Depends on the number of processors

- Varies with the size of the problem

When writing a parallel application we want processors to be used efficiently.

Amdahl’s law

We can think of a program or algorithm as having parallelizable and non-parallelizable parts. Parallelizable parts are those segments of the code that can execute on separate processors doing separate work. Non-parallelizable parts are those segments of the code which can only be done by one process, or equivalently, parts which do duplicate work if executed by more than one process.

Reading in a parameter which every process must have, for example, is a non-parallelizable operation. You can either have one process read, or you can have all processes read, but since all processes would be doing exactly the same work, no speed advantage would be gained by parallelizing the operation. Therefore input is a non-parallelizable part of most programs.

Amdahl’s Law takes this division of code into parallelizable and non-parallelizable parts to describe the maximum speedup of an algorithm.

Express the time to run the sequential code as the sum of the time to run the parallelizable fraction, $P$, and the time to run the non-parallelizable fraction, $S$ (for sequential):

\[T_s = T_s S + T_s P; S+P=1\]The time to run the parallel code is reduced by the number of processors $N$, but only for the parallelizable part. Furthermore, the parallel program may have to do certain operations that the sequential code does not. These operations might include, for example, setting up communications with other processes, or waiting for other processes to complete so that shared memory is consistent. We encapsulate these extra operations as parallel overhead, $K$:

\[T_p = T_s S + T_s \frac{P}{N} + K\]If we make the extremely optimistic assumption that $K$ is negligibly small and substitute the above into the definition of speedup, we get:

\[Speedup = \frac{T_s}{T_p} = \frac{1}{S+\frac{P}{N}}\]This equation is known as Amdahl’s law. It states that the speedup of a program from parallelization is limited by the fraction of the program that can be parallelized.

For example, if 50% of the program can be parallelized, the maximum speedup using parallel computing would be 2 no matter how many processors are used.

| Speed Up | Efficiency |

|---|---|

Amdahl’s law highlights that no matter how fast we make the parallel part of the code, we will always be limited by the sequential portion. Furthermore, if the parallel overhead $K$ is not actually small, that’s even worse news. It is entirely possible for the run time of a parallel program to be worse than the run time of the equivalent sequential program due to $K$.

This suggests that parallel computing is only useful when the number of processors is small, or when the problem is perfectly parallel, i.e., embarrassingly parallel. Amdahl’s law is a major obstacle in boosting parallel performance.

But notice that Amdahl’s law assumes that the total amount of work is independent of the number of processors, that is, the problem is the same size no matter how many processors we use. This type of scaling is referred to as Strong Scaling.

Gustafson’s law

It is very common, however, that we have some control over the size of our problem, and we are using parallel computing to handle larger problems than we can in sequential. For example, we might be doing some simulation on a grid where we can choose how fine to make the grid. Increasing the number of points on the grid increases (we hope) the precision of our results, but also requires more computing.

This scenario is called Weak Scaling:

If we can arrange that the total amount of work to be done in parallel varies linearly with the number of processors, speedup will be given by Gustafson’s law, shown here without derivation:

\[\large{Speedup = N − S * (N − 1)}\]where $N$ is the number of processors and $S$ is the sequential fraction as before. (We also continue to assume that the parallel overhead $K$ is negligible.)

| Speed Up | Efficiency |

|---|---|

The theoretical speedup is more optimistic in this case. We can see that any sufficiently large problem can be solved in the same amount of time by using more processors. We can use larger systems with more processors to solve larger problems.

Example problem with weak scaling.

Imagine that you are working on a numeric weather forecast for some country. To predict the weather, you need to divide the whole area into many small cells and run the simulation. Let’s imagine that you divided the whole area into 10,000 cells with a size of 50x50 km and simulation of one week forecast took 1 day on 1 CPU. You want to improve forecast accuracy to 10 km. In this case, you would have 25 times more cells. The volume of computations would increase proportionally and you would need 25 days to complete forecast. This is unacceptable and you increase the number of CPUs to 25 hoping to complete the job in 1 day. Gustafson’s Law says you have some reasonable hope of it working.

Example problem with strong scaling.

Imagine that you want to analyze customer transactional data for 2019. You cannot add more transactions to your analysis because there was no more. This is fixed-size problem and it will follow strong scaling (Amdahl’s) law.

Any real-life problem falls in one of these 2 categories, although it is not always practical to maintain a precise linear proportion between problem size and number of processors. The type of scaling is a property of a problem that cannot be changed, but understanding what kind of scaling to expect helps us make sensible choices about the number of processing elements so that we use them efficiently.

Measuring Parallel Scaling

It is important to measure the parallel scaling of your problem before running long production jobs.

- To test for strong scaling we measure how the wall time of the job scales with the number of processing elements (openMP threads or MPI processes).

- To test for weak scaling we increase both the job size and the number of processing elements.

- The results from these tests allow us to determine the optimal amount of CPUs for a job.

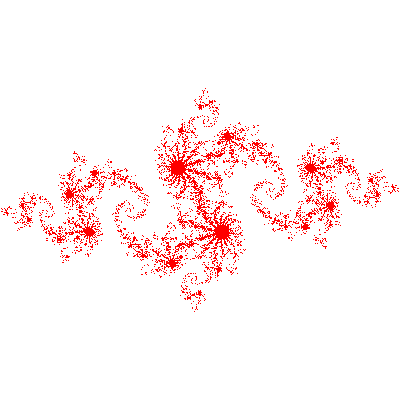

Example: Generating an image of a Julia set

The example program julia_set_openmp.c calculates an image of a Julia set. It is adapted from one written by John Burkardt and released under the GNU LGPL license.

The program finds a set of points in a 2D rectangular domain with width W and height H that are associated with Julia set.

The idea behind a Julia set is to choose a complex constant $c$, and then for each point $z_0$ in a complex domain, repeatedly evaluate this expression:

\[z_{n+1}=z_{n}^2+c\]If the sequence of values $z_{n}$ remains bounded, the initial $z_0$ is in the set; if the sequence diverges, the initial $z_0$ was outside the set. For each complex constant $c$ one gets a different Julia set.

To construct the image, we take rectangular region of pixels (x,y) and map each pixel to a complex number: $z_0=x+iy$. Each pixel then represents a starting point $z_0$ for the series $z_{n}$. We start computing the series, and if $\lvert z \lvert$ grows past some large number (1000 in this implementation) we conclude it’s outside the set and color it white. If it doesn’t diverge in a reasonable number of steps (200 in this implementation), we conclude it’s inside the set and draw it in red.

The program takes the width and height of the image (in pixels) and the number of parallel threads as arguments. It will print the number of threads and computation time on screen. It’s a good idea to repeat each computation several times to observe how much variance there is.

Running strong and weak scaling tests

We can run this sample program in both strong and weak scaling modes.

To test the strong scaling run the program with fixed size (width=2000, height=2000) and different numbers of threads (1-16).

To measure weak scaling we run the code with different numbers of threads and with a correspondingly scaled width and height.

Once the runs are completed we fit the strong and weak scaling results with Amdahl’s and Gustafson’s equations to obtain the ratio of the sequential part (s) and the parallel part (p).

Compiling and Running the Example

- Connect to a cluster (if you haven’t already), with X11 forwarding enabled so that you’ll be able to display some graphs.

- If you’re on Windows using MobaXterm, check that the “X” icon in the upper right-hand corner is colored.

- If you on a Mac, start XQuartz, then start a terminal and log in with

ssh -X username@cluster...The uppercase-Xturns on X11 forwarding.

- Download and unpack the code:

wget https://acenet-arc.github.io/ACENET_Summer_School_General/code/julia_set.tar tar -xf julia_set.tar cd scaling - Compile the program

julia_set_openmp.cgcc -fopenmp julia_set_openmp.c - Run on 2 CPUs (for example)

./a.out 200 200 2JULIA_OPENMP: C/OpenMP version. Plot a version of the Julia set for Z(k+1)=Z(k)^2-0.8+0.156i Height, width, threads, seconds: 200, 200, 2, 0.0103905 TGA_WRITE: Graphics data saved as 'julia_openmp.tga' JULIA_OPENMP: Normal end of execution.The program generates image file

julia_openmp.tga, which you may be able to see usingdisplay julia_openmp.tga. (You might have to turn on X11 graphics first if you get the errordisplay: unable to open X server) - To measure strong scaling submit array job:

sbatch submit_strong.sh#!/bin/bash #SBATCH --cpus-per-task=16 #SBATCH --time=5 #SBATCH --mem-per-cpu=1000M for nthreads in $(seq 1 16) do for replicate in 1 2 3 do ./a.out 200 200 $nthreads done done - Extract the scaling data from the raw output.

The data we need are in the

slurm-1234567.outfile. (Make sure the job ID matches the job you just submitted; you might do this more than once!) We need the thread counts and the run times, which you can extract with a utility likegreporawk:awk '/threads, seconds:/ {print $7, $8}' slurm-1234567.out > strong-1234567.csv - Fit the data with Amdahl’s law.

The python script will plot the data, and try to fit Amdahl’s law to the data

by finding a sequential fraction $S$ that fits best.

(To see the graph you may need to have an X11 server running and X11 forwarding enabled.)

module load python scipy-stack python strong_scaling.py strong-1234567.csvThe Python script will also save the figure as “strong_scaling.svg”, and you can re-display it using

display strong_scaling.svg.

Testing weak scaling

Find the

submit_weak.shjob script in thescalingdirectory you recently unpacked. It’s incomplete: Replace the....s with suitable numbers to evaluate Gustafson’s Law (the weak scaling law) like we just evaluated Amdahl’s law. What is the problem size in this example? How should it relate to the number of threads?Submit the job. When it has run, extract the data as we did above and use the

weak_scaling.pyscript to plot the data and a best-fit line under the assumptions of Gustafson’s law.Are your graphs smooth? If not, why do you suppose that might be?

Compare the speedups obtained using strong and weak scaling tests. Are they what you expected?

Compare the “serial fraction” estimated in each test. Are they the same? Why or why not?

Solution

Remember that Gustafson’s Law models the case where the amount of work grows in proportion to the number of processors. In our case, the problem size is the number of pixels and we use a thread for each processor. So to properly evaluate weak scaling in this example you must keep the product of the height and width in direct proportion to the number of threads.

One simple way to do this is to scale only the width while keeping the height constant, or vice versa. This is valid and should give you good results. Or you can carefully choose heights and widths in pairs that give proportional products, like 1000x1000 for one thread, 2000x3000 for 6 threads, and 4000x4000 for 16 threads. Also valid, although perhaps more error-prone.

Below we show a script that scales both height and width by the square root of the thread count, so that their product remains in direct proportion to the thread count and the aspect ratio of the image remains constant. It uses a couple of shell utilities which you may not have seen before,

bcandprintf.#!/bin/bash #SBATCH --cpus-per-task=16 #SBATCH --time=5 #SBATCH --mem-per-cpu=1000M wbase=2000 hbase=2000 for nthreads in $(seq 1 16) do width=$(printf '%.0f' `echo "scale=6;sqrt($nthreads)*$wbase" | bc`) height=$(printf '%.0f' `echo "scale=6;sqrt($nthreads)*$hbase" | bc`) echo "height x width: $height x $width" for replicate in $(seq 1 3) do ./a.out $height $width $nthreads done doneThere are a lot of factors that can affect run time, not all of which are easily controlled in a shared computing environment. For example, other processes running on the same machine as your test might affect the run time.

Scheduling Threads in OpenMP

In the OpenMP module later in this summer school we’ll describe how you can affect how OpenMP divides up parallel work using the ‘schedule’ directive. Here’s a preview:

The schedule refers to the way the work chunks are spread across threads. A static schedule means that it is decided very simply when the loop begins which thread will handle which iterations of the loop. A dynamic schedule means that each thread will work on a few iterations and then take the next chunk which hasn’t been taken by another thread. The latter allows better balancing if the work varies between different iterations, but requires some communication overhead.

Change ‘schedule(dynamic)’ to ‘schedule(static)’ at line 140 in julia_set_openmp.c, recompile the code, and rerun the strong scaling test. Compare test results with dynamic and static scheduling.

Which performs better for this Julia set calculation? Why do you suppose that is?

References:

- Amdahl, Gene M. (1967). AFIPS Conference Proceedings. (30): 483–485. doi: 10.1145/1465482.1465560

- Gustafson, John L. (1988). Communications of the ACM. 31 (5): 532–533. doi: 10.1145/42411.42415

Key Points

An increase of the number of processors usually leads to a decrease in efficiency.

An increase of problem size usually leads to an increase in efficiency.

A parallel problem can often be solved efficiently by increasing the number of processors and the problem size simultaneously. This is called “weak scaling”.

Not every problem is amenable to weak scaling.